AI Agent Governance: What to Prepare for as AI Enters Your Stack

AI agents are here, and they’re no longer just suggesting actions. They’re taking them, on their own! The question is: who’s governing them?

The ontology, an operational intelligence layer, models entities, their relationships, and the actions they can take, enabling “digital twins” of organizations.


Philosophically, ontology means the study of being. Does ‘nothing’ exist? Does ‘God’ exist? Do ‘I’ exist? To articulate metaphysical questions like these in a formal way, philosophers came up with a structure of rules and categories they can group entities into.

In data science and AI, ontologies are similarly formal and explicit specifications of concepts (like a vocabulary) and their relationships within a specific domain (like a network or blueprint).

Specifically, an ontology should have these three nailed down to ensure logical consistency for AI agents:

These definitions help algorithms understand the real-world environment they operate in, and make multi-agent communication more effective.

What makes an ontology powerful compared to a traditional database is how it formally models the relationships between entities and the actions they can perform. This unified model creates a “digital twin” of an organization that can provide insights, execute tasks, then update itself based on the results.

The main utility is in replacing siloed systems with a single, intelligent operational layer on top of an organization.

Let's look at two examples from different industries: a general hospital and a supply chain.

Hospital: The ontology would define objects like Patient, Doctor, Nurse, Bed, OperatingRoom, Medication, Diagnosis, MedicalImage, LabResult. Relationships would include Patient has_Diagnosis, Doctor treats_Patient, OperatingRoom has_Schedule.

Supply Chain: Objects could be Product, Supplier, Warehouse, Shipment, CustomerOrder, BillOfLading, InventoryLevel. Relationships: Supplier provides_Product, Shipment contains_Product, Warehouse stores_InventoryLevel.
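To make the objects-and-relationships idea concrete, here is a minimal Python sketch of the hospital schema as typed relationships, where each relationship declares which object type may sit on each side. The `SCHEMA` format, the `Ontology` class, and the `Schedule` type are illustrative assumptions, not any product's API; a production ontology would typically live in a graph database or an RDF/OWL store.

```python
from dataclasses import dataclass, field

# Hypothetical schema: relationship -> (allowed subject type, allowed object type).
# Names follow the hospital example; "Schedule" is an assumed object type.
SCHEMA = {
    "has_Diagnosis": ("Patient", "Diagnosis"),
    "treats_Patient": ("Doctor", "Patient"),
    "has_Schedule": ("OperatingRoom", "Schedule"),
}


@dataclass
class Ontology:
    types: dict = field(default_factory=dict)  # object id -> object type
    links: set = field(default_factory=set)    # (subject id, relationship, object id)

    def add_object(self, obj_id: str, obj_type: str) -> None:
        self.types[obj_id] = obj_type

    def relate(self, subj: str, rel: str, obj: str) -> None:
        # Reject links that violate the schema, keeping the model logically consistent.
        if rel not in SCHEMA:
            raise ValueError(f"unknown relationship: {rel}")
        expected_subj, expected_obj = SCHEMA[rel]
        if self.types.get(subj) != expected_subj or self.types.get(obj) != expected_obj:
            raise ValueError(f"{subj} {rel} {obj} violates the schema")
        self.links.add((subj, rel, obj))

    def neighbors(self, subj: str, rel: str) -> list:
        # All objects linked from subj via rel, sorted for stable output.
        return sorted(o for s, r, o in self.links if s == subj and r == rel)


if __name__ == "__main__":
    onto = Ontology()
    onto.add_object("patient_1", "Patient")
    onto.add_object("dr_lee", "Doctor")
    onto.add_object("dx_pneumonia", "Diagnosis")
    onto.relate("patient_1", "has_Diagnosis", "dx_pneumonia")
    onto.relate("dr_lee", "treats_Patient", "patient_1")
    print(onto.neighbors("patient_1", "has_Diagnosis"))  # ['dx_pneumonia']
```

The schema check is what separates this from a plain key-value store: an agent cannot record that a Patient treats a Doctor.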

Hospital: On top of these objects, the ontology would model actions and processes like AdmitPatient, ScheduleSurgery, DischargePatient, AdministerMedication. A workflow for "Patient Admission" might involve checking Bed availability, assigning a Doctor, and ordering initial LabResults.

Supply Chain: Actions include PlaceOrder, FulfillOrder, DispatchShipment, ReceiveShipment, UpdateInventory. A process for "Order Fulfillment" would link these actions, triggered by a CustomerOrder.
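An action in this sense is more than a function call: it has preconditions and changes several objects at once. The sketch below models the "Patient Admission" workflow as a hypothetical `admit_patient` action over an in-memory `HospitalState`; the "least-loaded doctor" assignment rule is an illustrative assumption, not something the ontology prescribes.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HospitalState:
    # Hypothetical in-memory state; a real system would read and write the ontology.
    beds: dict = field(default_factory=dict)         # bed id -> "available" | "occupied"
    doctor_load: dict = field(default_factory=dict)  # doctor id -> assigned patient count
    admissions: dict = field(default_factory=dict)   # patient id -> (bed id, doctor id)
    lab_orders: list = field(default_factory=list)   # (patient id, test name)


def admit_patient(state: HospitalState, patient_id: str, initial_tests: list) -> Optional[tuple]:
    """Sketch of AdmitPatient: check Bed availability, assign a Doctor, order LabResults."""
    free_beds = sorted(b for b, status in state.beds.items() if status == "available")
    # Precondition: a free bed and at least one doctor; otherwise change nothing.
    if not free_beds or not state.doctor_load:
        return None
    bed = free_beds[0]
    # Illustrative policy: pick the doctor with the fewest patients (ties broken by id).
    doctor = min(sorted(state.doctor_load), key=lambda d: state.doctor_load[d])
    # Apply all object updates together so the ontology never shows a half-admitted patient.
    state.beds[bed] = "occupied"
    state.doctor_load[doctor] += 1
    state.admissions[patient_id] = (bed, doctor)
    state.lab_orders.extend((patient_id, test) for test in initial_tests)
    return bed, doctor
```

Returning `None` when a precondition fails, rather than partially updating state, is the property that makes such actions safe to hand to an agent.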

Hospital: A new LabResult (data event) for a Patient updates the patient's object in the ontology. If the LabResult indicates a critical condition, this could trigger an alert (action) to the assigned Doctor through an application built on the ontology. When a Patient is discharged (action), the status of their assigned Bed (object) changes from "occupied" to "available" in real time, updating dashboards for bed management teams. The system can also track how long Patients with a certain Diagnosis typically stay and flag any Patient who deviates for review. This captures decisions and outcomes so the system can learn from them.

Supply Chain: A Shipment (object) being delayed (event) updates its ETA. The system then dynamically recalculates the potential impact on CustomerOrder fulfillment and on the InventoryLevel at the destination Warehouse, and alerts a manager, who can take corrective action. The results of that action are fed back into the system, creating a closed-loop process for handling disruptions.
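The event-to-action chain in the supply chain example can be sketched as a single event handler. Everything here (`on_shipment_delayed`, the dataclasses, the "at risk" rule) is a hypothetical simplification: it assumes orders due after the new ETA will be covered by the delayed shipment and ignores the shipment's quantity.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Shipment:
    shipment_id: str
    warehouse: str
    product: str
    eta: date


@dataclass
class CustomerOrder:
    order_id: str
    warehouse: str
    product: str
    quantity: int
    due: date


def on_shipment_delayed(shipment: Shipment, delay_days: int, inventory: dict,
                        orders: list, alerts: list) -> list:
    """Handle a delay event: update the ETA, recompute impact, alert a manager."""
    shipment.eta += timedelta(days=delay_days)
    # Stock already on hand at the destination Warehouse.
    on_hand = inventory.get((shipment.warehouse, shipment.product), 0)
    at_risk = []
    # Serve matching orders in due-date order from on-hand stock; an order that
    # cannot be covered and is due before the new ETA is flagged as at risk.
    for order in sorted(orders, key=lambda o: o.due):
        if order.warehouse != shipment.warehouse or order.product != shipment.product:
            continue
        if order.quantity <= on_hand:
            on_hand -= order.quantity
        elif order.due < shipment.eta:
            at_risk.append(order.order_id)
    if at_risk:
        alerts.append(f"{shipment.shipment_id} delayed to {shipment.eta}; orders at risk: {at_risk}")
    return at_risk
```

Closing the loop would mean writing the manager's response (say, an expedited replacement shipment) back as new objects, which the next event then sees.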

Core capabilities:

The goal is to act as the central nervous system of an enterprise, providing:

These capabilities are also the main value proposition of the ontology for any organization.

In practice, this means we need a concrete, machine-readable set of files and databases. Not a diagram on a whiteboard. Not just a system prompt.

The agents interact with the ontology through APIs and tools. They don't get a 50-page PDF explaining it.

Method 1: Tool-use and API calls (most common)

This is the primary mechanism. Each agent in a multi-agent system is given a set of "tools" it can use, and several of these tools are specifically designed to interact with the knowledge graph. For example, a graph-query tool might run a SPARQL query like this one:

```sparql
PREFIX ont: <http://mycompany.com/ontology#>

SELECT ?part ?stockLevel
WHERE {
  ?part a ont:Component .
  ?part ont:hasStockLevel ?stockLevel .
  FILTER(?stockLevel < 10)
}
```

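The agent's LLM brain is instructed to generate this query from the natural-language request "Find all components with stock levels below 10." Agents can also write back to the graph: after placing an order for a low-stock part, an agent might record it with an update like this: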

```sparql
PREFIX ont: <http://mycompany.com/ontology#>

INSERT DATA {
  ont:PurchaseOrder_987 a ont:PurchaseOrder .
  ont:PurchaseOrder_987 ont:forComponent ont:Part_ABC .
  ont:PurchaseOrder_987 ont:hasStatus "Placed" .
}
```

Once this update is committed, every other agent can see that Part_ABC is on order.
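On the agent side, tool use usually boils down to the LLM emitting a structured tool call that the runtime dispatches to a registered function. The sketch below assumes a JSON call format `{"tool": ..., "args": {...}}` and uses an in-memory set of triples in place of a real graph database; `find_low_stock` and `place_purchase_order` are hypothetical tools that mirror the two SPARQL examples in plain Python rather than parsing SPARQL.

```python
import json

# In-memory stand-in for the knowledge graph: (subject, predicate, object) triples.
# Identifiers mirror the SPARQL examples; the data itself is made up.
GRAPH = {
    ("ont:Part_ABC", "rdf:type", "ont:Component"),
    ("ont:Part_ABC", "ont:hasStockLevel", 4),
    ("ont:Part_XYZ", "rdf:type", "ont:Component"),
    ("ont:Part_XYZ", "ont:hasStockLevel", 25),
}


def find_low_stock(threshold: int) -> list:
    """Same logic as the SELECT query: components with stock below threshold."""
    components = {s for s, p, o in GRAPH if p == "rdf:type" and o == "ont:Component"}
    return sorted(
        (s, o) for s, p, o in GRAPH
        if s in components and p == "ont:hasStockLevel" and o < threshold
    )


def place_purchase_order(po_id: str, part: str) -> str:
    """Same effect as the INSERT DATA update: record a placed PurchaseOrder."""
    po = f"ont:{po_id}"
    GRAPH.update({
        (po, "rdf:type", "ont:PurchaseOrder"),
        (po, "ont:forComponent", part),
        (po, "ont:hasStatus", "Placed"),
    })
    return po


# Only registered tools can run: a natural place to enforce governance rules.
TOOLS = {"find_low_stock": find_low_stock, "place_purchase_order": place_purchase_order}


def dispatch(tool_call_json: str):
    """Execute a tool call emitted by the agent's LLM as JSON."""
    call = json.loads(tool_call_json)
    if call["tool"] not in TOOLS:
        raise ValueError(f"unknown tool: {call['tool']}")
    return TOOLS[call["tool"]](**call["args"])
```

The allowlist in `TOOLS` is the point worth keeping even in a real deployment: the agent can only touch the graph through functions someone has reviewed.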

Method 2: Ontology-informed system prompts & context

The entire ontology isn't stuffed into the prompt, but a relevant subset of it is. A logistics agent's system prompt, for example, might read:

"You are a Logistics Orchestration Agent. Your goal is to ensure the timely delivery of Shipments. You can interact with objects like Shipment, Warehouse, Carrier, and Route. Your available tools are get_shipment_status(shipment_id), calculate_optimal_route(origin, destination), and update_shipment_eta(shipment_id, new_eta)."
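Prompts like this are typically generated from the ontology rather than hand-written, so they stay in sync when the schema changes. A minimal sketch, assuming a hypothetical `ONTOLOGY_SUBSETS` catalog that maps each agent role to its goal, object types, and tools:

```python
# Hypothetical catalog: the slice of the ontology each agent role may see.
ONTOLOGY_SUBSETS = {
    "Logistics Orchestration Agent": {
        "goal": "ensure the timely delivery of Shipments",
        "objects": ["Shipment", "Warehouse", "Carrier", "Route"],
        "tools": [
            "get_shipment_status(shipment_id)",
            "calculate_optimal_route(origin, destination)",
            "update_shipment_eta(shipment_id, new_eta)",
        ],
    },
}


def oxford_join(items: list) -> str:
    """Join items as 'A', 'A and B', or 'A, B, and C'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + ", and " + items[-1]


def build_system_prompt(role: str) -> str:
    """Render only this role's slice of the ontology into a system prompt."""
    subset = ONTOLOGY_SUBSETS[role]
    return (
        f"You are a {role}. Your goal is to {subset['goal']}. "
        f"You can interact with objects like {oxford_join(subset['objects'])}. "
        f"Your available tools are {oxford_join(subset['tools'])}."
    )
```

Calling `build_system_prompt("Logistics Orchestration Agent")` reproduces the prompt quoted above.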

Method 3: Embedding and RAG (Retrieval Augmented Generation)

For very large ontologies, the relevant parts of the schema (the definitions of objects and relationships) can be embedded into vector space. When an agent has a query, it first does a similarity search on the ontology's documentation to find the relevant classes and properties. This retrieved context is then added to its prompt to help it formulate the correct API call or graph query. This is how it connects to a "knowledge base" representing the whole structure.
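The retrieve-then-prompt flow can be sketched without any external services. This version swaps in bag-of-words cosine similarity as a crude stand-in for a learned embedding model, and `SCHEMA_DOCS` is made-up documentation; in practice you would embed the schema with an embedding model and store the vectors in a vector index.

```python
import math
import re
from collections import Counter

# Hypothetical schema documentation for a few classes and properties.
SCHEMA_DOCS = {
    "Shipment": "A Shipment moves Products from a Supplier to a Warehouse and has an ETA.",
    "Warehouse": "A Warehouse stores InventoryLevel records for Products.",
    "CustomerOrder": "A CustomerOrder requests Products and triggers Order Fulfillment.",
    "hasStockLevel": "hasStockLevel links a Component to its current stock level.",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# "Embed" every schema entry once, up front.
INDEX = {name: vectorize(doc) for name, doc in SCHEMA_DOCS.items()}


def retrieve(query: str, k: int = 2) -> list:
    """Return the names of the k schema entries most similar to the query."""
    q = vectorize(query)
    return sorted(INDEX, key=lambda name: cosine(q, INDEX[name]), reverse=True)[:k]


def augment_prompt(query: str) -> str:
    """Prepend the retrieved definitions so the LLM can write a correct query."""
    context = "\n".join(f"- {name}: {SCHEMA_DOCS[name]}" for name in retrieve(query))
    return f"Relevant ontology definitions:\n{context}\n\nRequest: {query}"
```

For the request "Which components have a low stock level?", the top hit is the `hasStockLevel` definition, which is exactly what the agent needs to write the SPARQL query shown earlier.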

The ontology itself is the map. An agent understands its position by knowing its:

Of course, the operational layer needs to be fed by a reliable source of data. Because ontologies complement modern data modeling methodologies like Data Vault so well, let's take a quick look at where each one sits in the architecture:

| | Data Vault | Ontology |
|---|---|---|
| Primary goal | Store and integrate historical data. | Activate data for real-time operations. |
| Layer | System of Record / Integration Layer. | System of Engagement / Decision Layer. |
| Time focus | Historical, point-in-time, auditable. | Real-time, current state, dynamic. |
| Core question | "What was true?" | "What is true now, and what should we do?" |
| Technology | Typically relational data warehouses (Snowflake, BigQuery). | Typically graph databases (Neo4j, Neptune). |
| Represents | Raw, integrated facts with history. | High-level, semantic business concepts. |
| Use case | Business Intelligence (BI), reporting, analytics. | AI-driven automation, operational apps, semantic search. |

So, while the Data Vault archives the complete historical truth, the ontology can be used to activate the relevant parts of that truth for immediate action.
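One way to picture the hand-off: a Data Vault satellite keeps every version of an entity's attributes, and the ontology needs only the latest one. The sketch below collapses a simplified satellite (hub key, load date, attributes; real satellites also carry fields such as record source and hash diff) into a current-state view. `SatelliteRow` and `current_state` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SatelliteRow:
    """Simplified Data Vault satellite row: attributes of a hub key at a load time."""
    hub_key: str
    load_date: datetime
    attributes: dict


def current_state(satellite: list) -> dict:
    """Collapse full history into the latest attributes per hub key ("what is true now")."""
    latest = {}
    for row in satellite:
        kept = latest.get(row.hub_key)
        if kept is None or row.load_date > kept.load_date:
            latest[row.hub_key] = row
    # Copy the attributes so the ontology view never mutates the historical record.
    return {key: dict(row.attributes) for key, row in latest.items()}
```

The history stays intact in the vault for auditing ("what was true?"), while the collapsed view is what gets loaded into the ontology's objects.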

While many companies using these systems are secretive, there are some tangible examples.

1.) Airbus Skywise:

2.) Financial services (AML - Anti-Money Laundering):

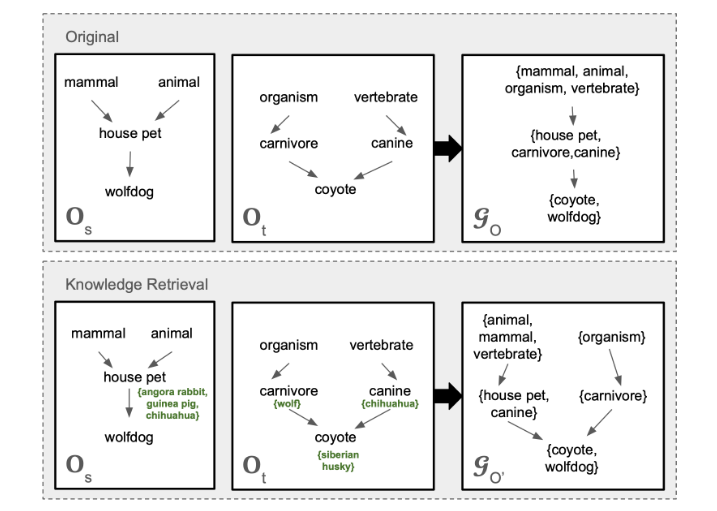

Paper title: KROMA: Ontology Matching with Knowledge Retrieval and Large Language Models

Arxiv link: https://arxiv.org/pdf/2507.14032

So, what if you have clashing definitions within your processes? What if your main ontology calls it a ‘car’, but one of your databases uses the term ‘automobile’?

Traditionally, you’d need handcrafted rules to sort this out, but those do not generalize well.

That’s why automatic ontology matching, such as the retrieval-plus-LLM approach in the KROMA paper above, is so promising: it can resolve many of these discrepancies without handcrafted rules, making ontologies themselves more robust and practically applicable.
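To show what a matcher has to produce, here is a deliberately simple baseline: normalize labels, map known synonyms, then pick the most similar target term by string similarity using Python's `difflib`. This is not KROMA's method; the hand-written `SYNONYMS` table stands in for the knowledge retrieval and LLM judgment that approach uses, and the 0.85 threshold is an arbitrary assumption.

```python
from difflib import SequenceMatcher

# Hypothetical synonym knowledge; retrieval-plus-LLM matchers replace this table.
SYNONYMS = {"automobile": "car", "vehicle": "car", "client": "customer"}


def normalize(label: str) -> str:
    """Lowercase, turn underscores into spaces, and map known synonyms."""
    label = label.strip().lower().replace("_", " ")
    return SYNONYMS.get(label, label)


def match_terms(source: list, target: list, threshold: float = 0.85) -> dict:
    """Map each source term to its most similar target term, or None if none is close enough."""
    mapping = {}
    for s in source:
        best, best_score = None, 0.0
        for t in target:
            score = SequenceMatcher(None, normalize(s), normalize(t)).ratio()
            if score > best_score:
                best, best_score = t, score
        mapping[s] = best if best_score >= threshold else None
    return mapping
```

Here `automobile` maps to `car` only because the synonym table says so; pure string similarity would never find that pair, which is exactly the gap that retrieval and LLMs are meant to close.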

The implementation is a tiered system:

The ambition is to move beyond siloed data and applications to a truly integrated, intelligent, and responsive operational environment. The success of this model across diverse problems (from healthcare to energy to manufacturing) shows that actions become far more powerful when they are grounded in deep semantic understanding.